When optimization goes wrong: Caught in Mammon’s Grasp

We return to my metaphor for maloptimization, or optimization failure, to describe different ways optimization processes often go wrong.

Analogies are fundamental to how humans navigate abstractions. Mammon is my name for the tendency of technology, social policy, and other goal-oriented human action to give rise to seemingly paradoxical and unintended consequences. These unintended consequences can be thought of as optimization failures or “maloptimization.”

What is maloptimization?

We briefly defined maloptimization in a previous post where I first introduced Mammon. Borrowing wisdom from Aristotle, who identified the mindless pursuit of wealth as capable of distorting goals, I shared his critique of "wealth-getting" as a proto-example of optimization failure. Maloptimization is the tendency for an optimizing process or system to produce unintended outcomes. This can happen for two key reasons:

- Goal displacement: Goal displacement occurs when a metric or goal-oriented activity is no longer producing intended outcomes because it has displaced the underlying motivation driving the process in the first place.

- Overoptimization: Sometimes a planner can have the right metric in mind but go too far in relying on that metric.

These two failure modes aren’t mutually exclusive, but they are two distinct things. I call these the left and right hands of Mammon, respectively. In this post we’re going to cover a variety of examples that illustrate both principles in action.

The left hand of Mammon: Goal displacement via suboptimization and “mis-optimization”

There are instances where an optimization process can lead to the “wrong” thing being modified, making everything else within a system worse off. This most commonly takes the form of suboptimization,[1] which is a term in systems science and cybernetics referring to instances where individual subcomponents or subsystems are optimized at the expense of an entire system.

Examples of suboptimization

The paradigmatic example of suboptimization, often taught in business school case studies, involves organizations encouraging excessive competition between departments and business units. Some amount of competition within an organization is healthy; however, when unchecked, it effectively results in cannibalization, as departments must literally impede one another to fight for a fixed set of resources. In scenarios like this, when a department wins, success will generally come at the expense of other departments and the organization as a whole, rather than driving the company toward its broader objectives.

Suboptimization example 1: Sears is wrecked from within

This is a lesson illustrated (but probably not learned) by Eddie Lampert, former CEO of Sears and largest stakeholder in the brand. In 2008, Lampert, then just Sears’ chairman, oversaw a strategy that eventually spun the company into 40 separate divisions with their own profit and loss (P&L). This decision was inspired by Lampert’s time as a hedge fund manager, with him viewing Sears as a portfolio of brands and services instead of a single retailer. An unabashed Ayn Rand enthusiast, he justified the move by pointing to competition as the key feature that makes markets successful at propagating useful information for business decision-making.

Because Sears was a retailer and not a hedge fund portfolio, business units were blocked by other units’ decisions. For example, when the president of retail services wanted to reduce the price of groceries in-store to compete with Walmart and Target, he couldn’t get agreement because this might have reduced the profits of related subdivisions. Lampert learned the hard way what any good capitalist should already know: firms exist to reduce transaction costs to business. Incorporating functions in-house allows for information on costs facing the company to be known and more easily shared with decision-makers within the organization. With divisions entering in and out of bitter feuds, no one wanted to talk to anyone. So, while individual Sears departments might have made optimal decisions for managing their own P&L, this would often increase losses elsewhere and thus increase costs for Sears as a whole.

As the principle of suboptimization states, independently optimizing each subsystem will not generally lead to a global system optimum and might worsen the overall system.
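To make the principle concrete, here is a minimal sketch loosely modeled on the grocery-pricing dispute above. Every number and function in it (the linear demand curve, the unit cost, the spillover spending per shopper) is a hypothetical assumption chosen for illustration, not Sears data.

```python
# Toy model of suboptimization. All figures are hypothetical assumptions.

def grocery_demand(price):
    """Assumed linear demand: lower grocery prices bring in more shoppers."""
    return max(0.0, 100.0 - 10.0 * price)

def grocery_profit(price, unit_cost=2.0):
    """Profit booked on the grocery division's own P&L."""
    return (price - unit_cost) * grocery_demand(price)

def other_divisions_profit(price, spillover=3.0):
    """Assume each grocery shopper also spends a little elsewhere in the store."""
    return spillover * grocery_demand(price)

def company_profit(price):
    """What the company as a whole earns at a given grocery price."""
    return grocery_profit(price) + other_divisions_profit(price)

# Candidate grocery prices from 2.0 to 10.0 in steps of 0.5.
prices = [p / 2 for p in range(4, 21)]

local_best = max(prices, key=grocery_profit)    # what the division would pick
global_best = max(prices, key=company_profit)   # what the whole company would pick

print("division's choice:", local_best, "-> company profit", company_profit(local_best))
print("company's choice: ", global_best, "-> company profit", company_profit(global_best))
```

Under these assumptions, the division's best price is higher than the price that is best for the company as a whole, because the division's P&L ignores the shoppers it drives away from everyone else.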

Suboptimization example 2: Contagious cancers

Emergent optimization processes, like evolution, are also not immune from illustrating some form of suboptimization.

Canine transmissible venereal tumor (CTVT), or Sticker’s sarcoma, is a cancer cell from some dog that died about 4,000 to 8,000 years ago that has escaped its host and gone on to travel the world, infecting dogs on every continent. Dogs aren’t the only organism whose species has produced transmissible cancers like this, but given that neither Tasmanian devils nor clams are cute, I suspect these cancers get less attention.

Natural selection is not an intentional, goal-oriented process, but from what we’ve observed, it’s fair to say that it appears to optimize local fitness across a population.[2] That is to say, when possible, this process, statistically speaking, tends to create the largest possible pool of individuals best suited for the environment that they’re in. We rarely talk about this process at the level of individual cells within an organism. And yet, the cells in every multicellular organism are alive and accruing changes to their genetic material. Natural selection can opportunistically optimize or select for the fitness of anything with genetic information, including the cells in your body.[3]

Right now, there’s a cat-and-mouse game going on inside you. Multicellular organisms like us have evolved dozens of sophisticated mechanisms to detect, correct, or remove genetic mutations that pile up within cells to prevent runaway cell growth. Should these fail, our immune system is perpetually on guard for cells that look like they’re going rogue.[4] This isn’t surprising, since natural selection can be said to optimize for fitness across a population. Evolution couldn’t do this if it produced organisms that spontaneously erupted into a mass of tumors and died. At the same time, though, the minute a cell acquires mutations or traits that allow it to bypass these biological checks, natural selection can occur, allowing this cell to go successfully rogue.

The story ends there in most cases: either the rogue cell is eradicated, or the organism dies. But in the case of CTVT and diseases like it, we’re shown that evolution is content optimizing for the success of an individual subcomponent whose innate tendency is not to improve fitness of a population. Evolution has instead ensured this organism, a tumor that is the last remnant of a long-dead dog, survives generation after generation at the expense of fitness to some dogs.[5]

Mis-optimization examples: Misspecified goals, observation bias, Goodhart’s Law, and goal displacement

Some people familiar with systems science colloquially refer to mis-optimization as instances where a planner engaging in goal-directed optimization ends up optimizing the wrong thing entirely. Suboptimization can be considered a subset of mis-optimization, but I think it’s worth distinguishing the two to fully highlight the various ways in which goal-oriented optimization can go wrong.

When a planner sets out to intentionally optimize a system, it can be hard to know where to begin to apply modifications to produce intended outcomes. Often, metrics are used as proxies that indicate the direction in which a process should move. Metrics, however, are abstractions and might poorly reflect the aspects of an environment that a planner is actually trying to improve when designing or influencing a system.

Mis-optimization example: No Child Left Behind

In the United States, No Child Left Behind (NCLB) is one of the most criticized pieces of legislation in the past 20 years. The policy was so controversial and widely condemned that it was replaced with strong bipartisan support in 2015. This policy failed in part because the metrics it used to evaluate school performance were tightly correlated with poverty. Schools that didn't improve on metrics defined in the policy were penalized in ways that would potentially block them from receiving further support. In some cases, this created reinforcing doom spirals that ensured the worst-off schools were cut from the very resources they’d need to improve the metrics used to evaluate them. Arguably the most visible failure of NCLB was that it created a tendency for educators to “teach to the test” because test scores were so heavily weighted in schools’ performance evaluations.

“Teaching to the test” is such a highly visible example of how NCLB accomplished the opposite of what it was intended to do that it became associated with the policy in the popular imagination. This is despite the fact that tests were not the only metric used in academic assessments under the policy, and that NCLB had other notable failures. Teaching to the test is also the perfect encapsulation of Mammon at work. An entire blog’s worth of material could be written about NCLB, so without going into excessive detail, the main context needed to understand the setup that encouraged teaching to tests was that:

- Schools were on the hook to guarantee continuous improvement in math and reading proficiency test scores, year over year.

- Failure to meet these targets (whether realistic or not) led to steep penalties for schools.

- This created a strong incentive for school faculty to pour all resources into improving test scores alone since not much else mattered.

At its extreme, some students recall that their entire curriculum for a given year solely focused on content that would be featured on proficiency tests, with other aspects of their curriculum falling by the wayside. While there was a wide degree of variance in students’ experiences under NCLB, there’s no doubt that it incentivized short-term thinking. This encouraged some schools to try to avoid punishment by focusing on improving test scores to the detriment of everything else. There are a lot of lessons that can be learned from the bizarre, perverse incentives created by NCLB that apply to other domains where optimization is being pursued:

1. Be careful what you ask for: Clearly specify goals to avoid goal misspecification. One of the clearest lessons illustrated by NCLB is that your goals and the objectives you establish to accomplish your goals can diverge. NCLB set out to improve educational achievement gaps across different student populations, a laudable goal. However, by anchoring the achievement of outcomes to test scores, NCLB became a policy that optimized for improving test scores instead of improving student outcomes more broadly. Test scores, however, aren’t the same thing as student performance, and designing a more thoughtful policy would require identifying metrics that better correlate to this or creating nuanced ways to measure student performance with tests.

2. What you measure matters: Metrics versus objectives and observation bias. There’s a common quote in business management circles that’s wrongly attributed to Peter Drucker stating, “what gets measured gets managed.” It highlights the importance of building good metrics to improve processes. This is actually a truncation of a longer quote by V. F. Ridgway in a paper titled “Dysfunctional Consequences of Performance Measurements”[6] that is far more nuanced. In his paper, Ridgway states:

What gets measured gets managed - even when it’s pointless to measure and manage it, and even if it harms the purpose of the organization to do so.

Simply because you can measure something doesn’t mean it accurately captures what you’re trying to improve or change about an environment or system. In the case of NCLB, the emphasis on measuring outcomes with math and reading standardized test performance displaced focus on critical thinking, creativity, social science and science performance, student attendance, student-teacher rapport, etc. These more abstract, and arguably more important, facets of education can’t be measured as easily as standardized test scores, and so in some schools and districts they fell by the wayside.

One of the most common ways that Mammon sneaks into the picture is when we engage in optimization processes that reduce the world to simple unidimensional metrics, whether this is intentional or not. It is crucial to avoid the trap of only optimizing what can be measured because this almost always comes at the expense of everything else that’s part of our complex reality.

3. Your objectives are always a moving target: Goodhart’s Law in action. In public policy and business management, there is a saying that is falsely attributed to economist Charles Goodhart stating:[7]

When a measure becomes a target, it ceases to be a good measure.

Typically, this quote refers to the fact that when you explicitly select a measure to become your objective in environments where performance is evaluated, you incentivize undesirable outcomes as people unnaturally morph their behavior to suit the evaluation criteria. “Teaching to the test” is a straightforward illustration of how this can play out.

This tendency has been given the name Goodhart’s Law, and it points to how bad metrics can incentivize “gaming the system” in business or regulatory policy. While much emphasis is given to this adversarial version of Goodhart’s Law, I think a weaker version of Goodhart’s Law is at play in optimization processes that don’t involve shaping human behaviors. At its core, Goodhart’s Law highlights that when we initially begin to alter our environment, our environment changes, and so mindlessly optimizing the same metric or variable indefinitely can lead to unintended outcomes. In the next section, we will discuss a phenomenon colloquially referred to as “overoptimization” that touches on this.

4. Ensure the long-term survival of your goals: Don’t let metrics displace goals. Ultimately, it’s very easy to let metrics displace the actual things we want to shape and improve, and we have to work hard to prevent this. It’s easier said than done, of course, but one starting recommendation is to embrace a pluralist stance toward defining and measuring success.

The right hand of Mammon: Overoptimization

Mammon’s left hand helps create wicked problems, making our intentions and the systems that actualize these intentions diverge from one another. But its right hand is even more fearsome. Even when systems behave as expected, sometimes Mammon will ensure we overshoot our goals. Physicist Max Tegmark spoke about this tendency for indefinite optimization to lead to diminishing returns:[8]

If you take one goal and just optimize for it indefinitely, what basically happens is in the beginning, it’ll make things better. But if you keep going, at some point, it’s going to start making things worse. And then gradually it makes things really, really terrible.

Tegmark then goes on to talk about an analogy:

Suppose you want to go from here back to Austin (which is somewhere south of us) and you optimize for going south. You’ll get closer and closer to Austin. But eventually, you’ll start getting away from it again.
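Tegmark’s analogy is simple enough to write down directly. In this rough sketch, the starting distance and step size are made-up numbers; the point is only that “keep going south” and “get close to Austin” agree at first and then come apart.

```python
# Hypothetical numbers: we start 300 km north of Austin and drive south in 50 km steps.
START_KM_NORTH_OF_AUSTIN = 300
STEP_KM = 50

def distance_to_austin(steps_south):
    """Distance from Austin after a number of steps due south (north-south line only)."""
    position = START_KM_NORTH_OF_AUSTIN - STEP_KM * steps_south
    return abs(position)

steps = range(13)
distances = [distance_to_austin(s) for s in steps]

# The proxy ("how far south have I gone?") never stops improving,
# but the real goal ("how close am I to Austin?") has a best point.
best_step = min(steps, key=distance_to_austin)

for s, d in zip(steps, distances):
    print(f"steps south={s:2d}  km from Austin={d}")
```

With these numbers, the traveler who never stops optimizing for “south” ends up exactly as far from Austin as when they started, just on the other side of it.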

Antibiotics: When left and right hands meet (Final example)

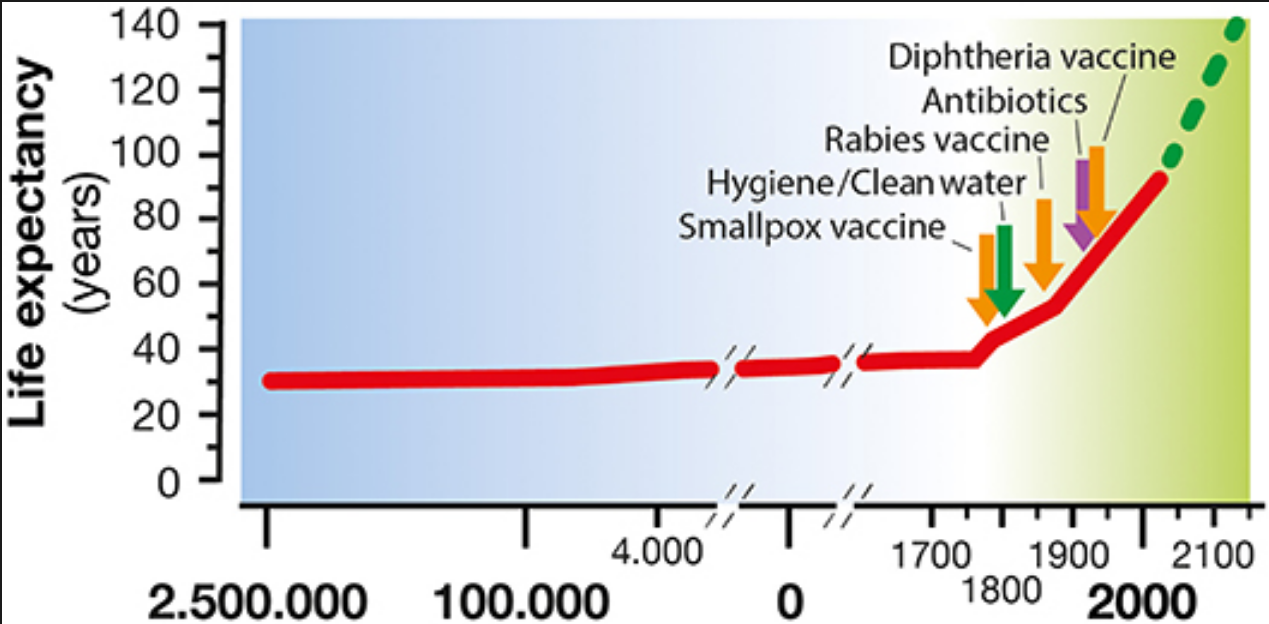

To make the chaos of optimization gone awry more tangible, I’m going to talk about yet another example: the invention of antibiotics, which are arguably the most important category of drugs ever created. Before the 1940s, infectious disease accounted for most of the mortality rate worldwide, and life expectancy was less than half of what it is today.[9]

Fast-forward to today, however, and we have a problem. In 2019 alone, an estimated 4.95 million people died with or from antimicrobial-resistant bacteria.[11] This number is expected to increase in the coming years, perhaps reaching as high as 10 million by 2050.[12] Simultaneously, the discovery of novel antibiotics has plummeted since the 1970s, at a time when we need to increase the rate of antibiotic discovery.

Overoptimization and antibiotics

Complex issues like the growth of antibiotic-resistant infections illustrate the degree to which Mammon’s left and right hands shape the challenges we collectively face today.

One of the key reasons we face growing antibiotic resistance is that antibiotics have been increasingly overused, especially in agriculture. Between antibiotic usage in humans and farm animals, over 100,000 tons are used yearly.[13] While antibiotics help treat disease, some of the antibiotics administered to humans and animals end up back in the environment, which builds up resistance to these drugs in bacteria populations over time.[14] This indicates we’re using more than the amount needed to manage global diseases.

Suboptimization and mis-optimization with antibiotics

Besides overuse, the more fundamental issue with antibiotics is how they treat disease. While curing disease is an admirable goal, this entire blog post has been filled with examples of how human intentions diverge from the behavior of the systems we’ve designed. Antibiotics are no exception. While these drugs are meant to serve as agents that inhibit bacterial growth, with a high-level understanding of evolution, you can quickly see why this isn’t how antibiotics actually work.

Without going into extensive detail about the science of antibiotics, it’s best to see these drugs functioning as agents that modify the environment of bacterial populations rather than as agents that cure disease directly. Within a single bacterium, most antibiotics disrupt the cellular processes that allow it to function. This effect is repeated across the entire susceptible population of bacteria and often includes bacteria that are neutral or even beneficial to us or to the broader ecosystem. Additionally, this imprecise targeting means that harmful bacteria can be missed. But even when antibiotics hit their mark, a bacterium can still survive an encounter with these drugs and go on to reproduce or share its genes through horizontal gene transfer (HGT).[15] This process is akin to deliberately selecting for bacteria that can withstand antibiotic-saturated environments through luck or function. It’s not much different from the artificial selection used in dog breeding or growing crops that have traits that we desire. Only in this case, this is an unintended side effect of a technology we developed.
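To see why changing the environment amounts to selection, here is a deliberately crude sketch. The kill rates, starting population, and regrowth rule are all hypothetical assumptions, and the model ignores HGT and everything else about real microbiology; it only shows how repeated exposure shifts a population toward whatever happens to survive.

```python
# Toy selection model. All parameters are hypothetical assumptions.

def treat(susceptible, resistant, kill_susceptible=0.9, kill_resistant=0.1):
    """One antibiotic course: most susceptible cells die, most resistant cells survive."""
    return susceptible * (1 - kill_susceptible), resistant * (1 - kill_resistant)

def regrow(susceptible, resistant, capacity=1_000_000):
    """Survivors repopulate up to a fixed capacity, keeping their relative proportions."""
    scale = capacity / (susceptible + resistant)
    return susceptible * scale, resistant * scale

# Start with a single resistant cell in a population of one million.
susceptible, resistant = 999_999.0, 1.0

resistant_fractions = []
for course in range(8):
    susceptible, resistant = treat(susceptible, resistant)
    susceptible, resistant = regrow(susceptible, resistant)
    resistant_fractions.append(resistant / (susceptible + resistant))

for course, fraction in enumerate(resistant_fractions, start=1):
    print(f"after course {course}: {fraction:.4%} resistant")
```

Nothing in the loop “wants” resistance. Under these assumptions, the drug simply removes the competition, and within a handful of courses a one-in-a-million variant makes up nearly the whole population.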

You’ll notice that in this example, I talked about both hands of Mammon. While I’ve subdivided the other examples in this post into distinct instances of suboptimization, mis-optimization, and overoptimization, the truth is that, in the messy real world, it can be hard to disambiguate which of these are happening, as they can occur together. The intention of using these terms is not to be pedantic about the correct name for optimization failures but to provide a thoughtful framework to think about how systems work and how they can produce unintended consequences.

In the case of antibiotics, we’ve gone from treating disease within individuals to needing to manage a complex governance problem where the solution is to get everyone on board about controlling their usage of antibiotics to avoid polluting the environment with these substances and furthering the growth of antibiotic-resistant bacteria. Many of our technologies create a similar pattern, called a "progress trap," where the technology eventually reintroduces a second-order problem resembling the one it initially solved or creates a coordination problem that requires global governance to address.[16] We'll talk about this pattern in greater detail in future posts.

Summary

- Philosophers like Aristotle identified that wealth-getting or wealth-seeking had the ability to “displace” our other goals and values because it can easily be mistaken for an end unto itself.

- This tendency is not unique to wealth. It is very easy for measurable goals and metrics to displace the complex objectives they correspond to. "Mammon" is a metaphor that takes this insight, which ancient philosophers and theologians recognized in wealth, and applies it more broadly through concepts like optimization and Goodhart's Law.

- The metaphorical Mammon refers to instances where optimization processes lead to unintended consequences. Mammon is a deceptive deity whose aid comes at a steep price. That price is whatever we end up sacrificing to achieve the outcomes we pursue.

- Optimization is the process of modifying an environment or a part of an environment to better match desired criteria. This can involve deliberately designed systems. Optimization can also “emerge” through the interaction of components within an organic system. Examples include:

- Designed systems: Social media ad clicks or the throughput of water through a pipe.

- “Emergent” or organic optimization: Arguably, evolution.

- There are two ways in which “Mammon” optimization can go awry and create paradoxical consequences.

- The left hand of Mammon: Suboptimization and mis-optimization

- Suboptimization: a term in systems science referring to instances where individual subcomponents or subsystems are optimized at the expense of an entire system.

- Mis-optimization: Instances where a planner engaging in goal-directed optimization ends up optimizing the wrong thing entirely.

- E.g., Goodhart's Law, poorly designed metrics, and goal displacement.

- Example: “Teaching to the test” is the result of wanting to improve student retention of knowledge but solely focusing on one observable metric (test scores) to do so. The end result is that standardized test taking is improved but likely at the expense of actual learning.

- The right hand of Mammon: Overoptimization

- Overoptimization is when optimization is pursued past the point of diminishing returns.

- Suboptimization, mis-optimization, and overoptimization often occur together and can be seen in our greatest challenges.

- Example: Antibiotic-resistant bacteria. Antibiotics are often overused and overprescribed, even when they are beneficial.

- Antibiotics, especially older generations, are imprecise in how they target bacteria. Simultaneously, they kill helpful bacteria and end up in an environment where more bacterial populations are exposed to them, giving them opportunities to evolve resistance.

- The result is that antibiotics select for successive generations of bacteria that are better adapted to resist them, and we have to keep inventing even more powerful, novel antibiotic agents while governing our current use.

Recommended Reading

- Book: Thinking in Systems: A Primer by Donella Meadows

- Book: The Unaccountability Machine: Why Big Systems Make Terrible Decisions - and How The World Lost its Mind by Dan Davies

- Book: When More Is Not Better: Overcoming America’s Obsession with Economic Efficiency by Roger L. Martin

- Book: The Ingenuity Gap: Facing the Economic, Environmental, and Other Challenges of an Increasingly Complex and Unpredictable Future by Thomas Homer-Dixon

- Book: A Short History of Progress by Ronald Wright

- Paper: Asymptotic burnout and homeostatic awakening: a possible solution to the Fermi paradox? by Michael L. Wong and Stuart Bartlett

- Academic Paper: Value Capture by C. Thi Nguyen

- Academic Paper: Playing it Forward: Path Dependency, Progressive Incrementalism, and the “Super Wicked” Problem of Global Climate Change by Kelly Levin, Benjamin Cashore, Steven Bernstein, Graeme Auld

Footnotes

1. I HATE this word because the prefix “sub” makes it ambiguous as to whether the word means “less than optimal” or “optimization of a subcomponent.” I’m using it as the latter, and this seems to be the preferred usage. Regardless, optimization of a subcomponent tends to lead to less than optimal outcomes for an entire system, so I suppose there's overlap between both meanings.

2. Memes like “survival of the fittest” give the illusion that evolution is teleological, seeking to produce the absolute best organism. However, competition, a key selective pressure in natural selection, can be thought of as a blind test-giver, exposing a population to local conditions at random. It does not ensure fitness beyond what is necessary to survive in an organism’s current environment.

3. There’s a somewhat uncommon view that evolutionary selection pressure works at the level of individual genes, not just on traits or organisms (see The Selfish Gene). On this view, living things are just capsules for the individual genes that drive their propagation. In any case, tumors illustrate suboptimization if you believe a tumor cell to be a component of a broader system, like the organism the tumor grew in.

4. As we age, get exposed to carcinogens, or otherwise experience a failure in these systems, cancer is more likely to happen. Cancer in humans has been highly correlated with age. For example, in the US, about 80% of cancers occur in people 55 years and older. However, incidence rates of cancer are increasing in younger people, which I hope to cover in a future post.

5. CTVT is still a cancer, so it can’t be too lethal, or it’d reduce the available population of dogs that it can reproduce in. Over time, the CTVT variants that end up surviving must be benign enough that they don’t significantly reduce a dog’s available lifespan, which is something scientists have observed.

6. Ridgway, V. F. (1956). Dysfunctional consequences of performance measurements. Administrative Science Quarterly, 1(2), 240-247. https://www.jstor.org/stable/2390989

7. As stated by anthropologist Marilyn Strathern in her 1997 paper titled “Improving Ratings: Audit in the British University System.” This would become the standard phrasing of Goodhart’s Law despite this not being Goodhart's original wording.

8. While Tegmark is mainly concerned with AI, he identifies overoptimization across multiple domains, which I find to be the most interesting part of this clip.

9. Adedeji, W. A. (2016). The treasure called antibiotics. Annals of Ibadan Postgraduate Medicine, 14(2), 56-57. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5354621/

10. Sanitation (followed by improvements in nutrition) has been the largest driver of life expectancy in the past 175 years. Typically, graphs with life expectancy data have an inflection point or kink around 1850, when public health practices became more widespread. Nonetheless, antibiotics have played a role in helping stabilize gains in life expectancy.

11. Antimicrobial Resistance Collaborators. (2022). Global burden of bacterial antimicrobial resistance in 2019: a systematic analysis. The Lancet, 399(10325), 629-655. https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(21)02724-0/fulltext

12. This 10 million number came from the Review on Antimicrobial Resistance in 2014. But anticipated deaths from future harms can be challenging to estimate. More research will likely have to be done to fully quantify future costs.

13. Pokharel, S., Shrestha, P., & Adhikari, B. (2020). Antimicrobial use in food animals and human health: time to implement ‘One Health’ approach. WHO South-East Asia Journal of Public Health, 9(2), 5-8. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7648983/

14. In some cases up to 90% of antimicrobials might not be metabolized when consumed and end up in the environment when they leave our bodies. This happens in tandem with improper disposal, handling, or agricultural contamination, which results in antibiotic pollution, exposing bacteria populations to antibiotics and giving them a chance to build resistance.

15. HGT allows bacteria to acquire antibiotic resistance within the same generation. HGT, and not traditional generation-to-generation gene transfer, has been a massive driver of resistance, with some antibiotics and antibacterial biocides being known to induce HGT.

16. Problems like climate change can be subdivided into a series of governance problems like fossil fuel phase-outs, sustainable agriculture commitments, water, and pollution management. Similarly, solutions to climate change, like geoengineering, will likely require governance to deploy and maintain. I also hope to talk about this in future blog posts.